Lab 3 - Structure from Motion

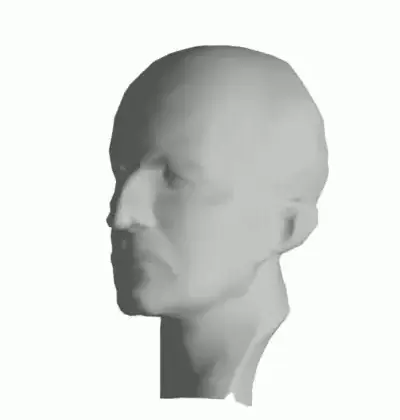

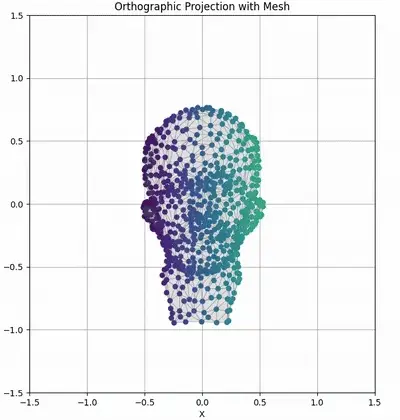

Middle: Sequence of tracked feature points on the ground-truth mesh, viewed from an orthographic camera.

Right: Fill matrix visualization showing gradual accumulation of observations across frames (shaded entries are known image coordinates).
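The fill matrix corresponds to the measurement matrix used in factorization. For F frames and P tracked points it has 2F rows (an x row and a y row per frame) and P columns, and unobserved entries are missing. The NumPy sketch below makes a few assumptions. Tracks are stored as an (F, P, 2) array, with NaN marking unobserved points. Rows are interleaved per frame. `build_measurement_matrix` and `complete_low_rank` are hypothetical helper names and are not part of the provided code template. For filling the gaps, the sketch uses one simple technique, iterative rank-4 SVD imputation. It relies on the fact that an uncentered affine measurement matrix has rank at most 4. The completion method expected in the lab may differ.

```python
import numpy as np


def build_measurement_matrix(tracks):
    """Stack tracks of shape (F, P, 2) into a 2F x P matrix plus a visibility mask.

    Assumed convention: unobserved points are NaN; rows are interleaved as
    (x of frame 0, y of frame 0, x of frame 1, ...).
    """
    F, P, _ = tracks.shape
    W = np.empty((2 * F, P))
    W[0::2] = tracks[:, :, 0]   # x-coordinates in even rows
    W[1::2] = tracks[:, :, 1]   # y-coordinates in odd rows
    mask = ~np.isnan(W)         # True where the entry was observed (shaded cells)
    return W, mask


def complete_low_rank(W, mask, rank=4, n_iter=1000, tol=1e-10):
    """Fill unobserved entries of W by repeated rank-`rank` SVD projections.

    Observed entries stay fixed; only entries where mask is False are updated.
    """
    counts = mask.sum(axis=1)
    sums = np.where(mask, W, 0.0).sum(axis=1)
    # Initialize each gap with the mean of the observed entries in its row (0 if none).
    row_means = np.divide(sums, counts, out=np.zeros_like(sums), where=counts > 0)
    X = np.where(mask, W, row_means[:, None])
    missing = ~mask
    for _ in range(n_iter):
        U, s, Vt = np.linalg.svd(X, full_matrices=False)
        low_rank = (U[:, :rank] * s[:rank]) @ Vt[:rank]   # best rank-`rank` approximation
        change = np.linalg.norm(low_rank[missing] - X[missing])
        X[missing] = low_rank[missing]                     # overwrite only the gaps
        if change < tol:
            break
    return X
```

Observed entries are never modified, so the completed matrix still agrees exactly with the tracked data. With noisy real tracks, the rank-4 constraint only holds approximately, and the filled values should be treated as estimates.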

Overview

In this assignment you will implement an affine factorization method for structure from motion. You will then extend it to handle missing data (occlusions) via matrix completion, and perform a metric upgrade to recover the true Euclidean structure. You will test your implementation on provided datasets and on your own videos, and document your results with code, figures, and analysis.
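For complete data, affine factorization is commonly done in the Tomasi-Kanade style. First, subtract each row's mean to remove translation. Then take a rank-3 SVD of the centered matrix. The result is only defined up to a 3x3 affine transform. The metric upgrade chooses a transform Q that makes each camera's two rows orthonormal. It solves linearly for the symmetric matrix L = QQ^T using the constraints i_f^T L i_f = 1, j_f^T L j_f = 1 and i_f^T L j_f = 0, then recovers Q by Cholesky factorization. The NumPy sketch below rests on several assumptions. It uses a pure orthographic camera (unit-norm rows), interleaved x/y rows per frame, and a complete measurement matrix. The function names are hypothetical. It is one possible formulation, not necessarily the one expected in the lab.

```python
import numpy as np


def affine_factorization(W):
    """Rank-3 factorization of a complete 2F x P measurement matrix.

    Returns motion M (2F x 3), structure S (3 x P) and row offsets t (2F,)
    with W ≈ M @ S + t[:, None], defined only up to a 3x3 affine transform.
    """
    t = W.mean(axis=1)                  # each row's mean = projected 3D centroid
    W_c = W - t[:, None]                # centered (registered) measurements
    U, s, Vt = np.linalg.svd(W_c, full_matrices=False)
    root_s = np.sqrt(s[:3])             # split the top-3 singular values evenly
    M = U[:, :3] * root_s
    S = root_s[:, None] * Vt[:3]
    return M, S, t


def _sym_coeffs(a, b):
    """Coefficients of a^T L b in the 6 unique entries of a symmetric 3x3 L."""
    return np.array([a[0] * b[0], a[0] * b[1] + a[1] * b[0], a[0] * b[2] + a[2] * b[0],
                     a[1] * b[1], a[1] * b[2] + a[2] * b[1], a[2] * b[2]])


def metric_upgrade(M, S):
    """Find Q so each camera's rows in M @ Q are orthonormal (orthographic model)."""
    A, rhs = [], []
    for f in range(M.shape[0] // 2):
        i, j = M[2 * f], M[2 * f + 1]   # interleaved x/y rows of frame f (assumed layout)
        A += [_sym_coeffs(i, i), _sym_coeffs(j, j), _sym_coeffs(i, j)]
        rhs += [1.0, 1.0, 0.0]          # |i|^2 = |j|^2 = 1, i . j = 0
    l = np.linalg.lstsq(np.array(A), np.array(rhs), rcond=None)[0]
    L = np.array([[l[0], l[1], l[2]],
                  [l[1], l[3], l[4]],
                  [l[2], l[4], l[5]]])
    Q = np.linalg.cholesky(L)           # L = Q Q^T; fails if L is not positive definite
    return M @ Q, np.linalg.inv(Q) @ S
```

After the upgrade, the reconstruction is still ambiguous up to a global rotation and a possible reflection. This is because M Q R and R^T Q^-1 S give the same product for any orthogonal R. With noisy tracks, the least-squares L may fail to be positive definite, and the Cholesky step will then raise an error.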

Important: You can earn 2 bonus marks for each section you complete and demo in person during the lab period in which the lab is introduced.

Lab Introduction Slides: available here

Prelab Questions (5%)

The prelab questions can be found here; you will need your University of Alberta email to access them. They are also available under the Canvas assignment. Due Tuesday, March 3rd at 5pm.

Setup

First, download the lab code and report templates. These provide the structure for your report and the file structure for your code. You may write your code however you wish within the files, but please ensure that it is adequately commented and that each part of the lab can be run by running the file corresponding to that question.

You will need to use your University of Alberta email to access the below templates.

Code template: can be found here.

Report template: can be found here.

Lab questions will be available before the introductory session.

Submission Details

- Include the accompanying code used to complete each question, and ensure it is adequately commented.

- Ensure all functions and sections are clearly labeled in your report to match the tasks and deliverables outlined in the lab.

- Organize files as follows:

  - `code/`: folder containing all scripts used in the assignment.

  - `media/`: folder for images, videos, and results.

- Final submission format: a single zip file named `CompVisW26_lab3_lastname_firstname.zip` containing the above structure.

- Your report for Lab 3 is to be submitted at a later date. It should contain all media, results, and answers as specified in the instructions above. Ensure your answers are concise and directly address the questions.

- The total mark for this lab is 100 for all students. Your lab grade including bonus marks is capped at 110%.
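If you prefer to build the submission archive with a script, a minimal sketch using Python's standard-library `zipfile` module might look like the following. It assumes the `code/` and `media/` folders sit side by side in one project folder, passed as `root`. `package_submission` is a hypothetical helper, and `lastname`/`firstname` should be your own names, following the naming rule above.

```python
import zipfile
from pathlib import Path


def package_submission(root, lastname, firstname):
    """Zip root/code and root/media into CompVisW26_lab3_<lastname>_<firstname>.zip.

    Hypothetical helper: archive paths keep the code/ and media/ prefixes so the
    zip reproduces the required folder structure.
    """
    root = Path(root)
    out = root / f"CompVisW26_lab3_{lastname}_{firstname}.zip"
    with zipfile.ZipFile(out, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for folder in ("code", "media"):
            for path in sorted((root / folder).rglob("*")):
                if path.is_file():
                    zf.write(path, arcname=path.relative_to(root).as_posix())
    return out
```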